“Over My Head – Live” (Bluegrass Live Edition) by Judah & the Lion, Jerry Douglas, and Dan Tyminski.🎵

I love re:invent announcements.

Because of how obnoxiously loud I’ve been (Reddit has been far worse) about how “making more clusters is not the goal,” a few people who saw the phrase “Cluster Vending Machine” at re:invent brought it up to me. And by few I mean at least 10 of you. “Molly! Are you going to write about the cluster vending machine?!?!!!!!”

Hi EKS team. Love you.

First, I am actually excited about the “Cluster Vending Machine” announcement from EKS but it’s not the one I’m most excited about. The inclusion of Cluster Access Management and EKS APIs in the re:invent “The Future of Amazon EKS” talk are far more important and around the corner. Also the “Cluster Vending Machine” bullet (1) was for a 5 year plan which is probably how long it would take to do that well and (2) I don’t exactly know what that product is – I assume The EKS team desires to hear everyone’s voice on the real challenges with Kubernetes in Platform Engineering in order to make a good one. That’s what this is. What I know for certain:

Provisioning new clusters is not the hardest problem.

Infrastructure Platform Engineering is a Paradox

Let’s start with AWS’s COPE team theory to get to the root cause. As part of the Operational Excellence pillar of the “Well-Architected Framework,” there is a page on “Centralized governance and an internal service provider consulting partner.”

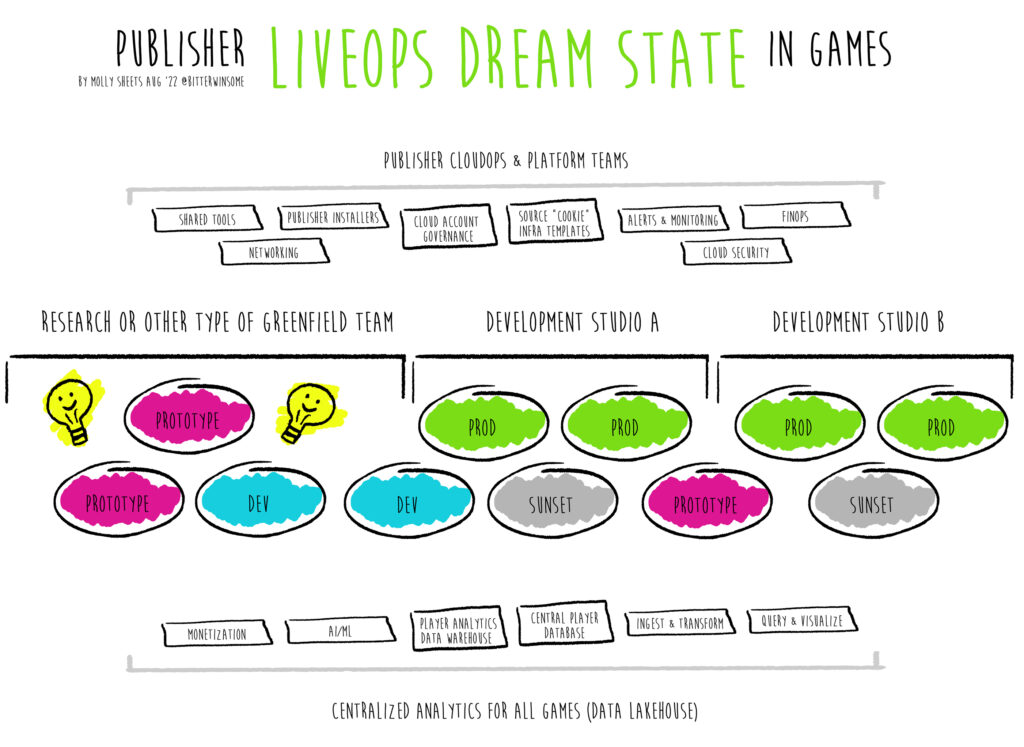

The above link proposes COPE theory – Cloud Operations and Platform Enablement – and has been around for years. This idea is that as teams mature they eventually build centralized structures which own standards. That centralized structure includes platform engineering and consulting engineers. Many large organizations deploying infrastructure operate this way today. It may be called CloudOps, It may include DevEx, it may be called DevOps, DevSecOps, NoOps. It could include observability, networking, and security. No matter how a team is re-named and how many times we shove the word “Ops” at the end of it, all of these problem spaces fall under COPE. It’s one centralized group trying to hone in on specific guardrails at the left, front, back, right side of SDLC (software development lifecycle) pipelines – what they want to own, what they don’t, and what their customers are willing to do.

But there’s a 5th phase, “Decentralized governance” which implies that some companies will get good enough at this chaos to fully decentralize and only the platform engineering team exists putting most of the customization work on their internal customers with now managed services components. I’ve left the fantasy land where I used to believe that was possible to 100% achieve. I now believe it lands at 10 years of re-orgs almost everywhere and isn’t an achievable state of permanence.

Joking aside, the right model is absolutely chaos – There are some who willingly do not make enough time for maintenance (or as some call it technical debt). In organizations that have fully decentralized teams with a tiny platform engineering team, this implies that all those customers are actually going to own maintenance OR not be upset when maintenance is done automatically for them.

YALL KNOW THAT IS NOT TRUE. 🙂

Tell me of a time someone in your organization did not get upset or ask for an extension because they were behind on updating some legacy product for which they were given 50 million warnings to update it and then tried to deploy to stage and everything flippin’ broke. Sorry I only asked for one time. You did not need to give me 20 examples. Deprecation is a shared pain across this industry for which we would all host charity marathons for if it didn’t make so many people want to drink trying to get things deprecated.

Vending More Clusters is Not the Way to Goal a Company’s Internal CloudOps Business

Provisioning Kubernetes clusters, centralized or not, is not the most time consuming part of running Kubernetes. Calculating number of clusters is a great metric to have for adoption initially, but more clusters, does not 1:1 match to more customers and falls apart with growth.

Whether a team uses Terraform, AWS CloudFormation, Pulumi, or AWS CDK the most time consuming part of provisioning, hands down, is making sure a customer can get an AWS account in an organization in the first place.

The 2nd most time consuming part, because it often puts people back into phase 1 of cloud maturity “The fully separated operating model,” is have the networking setup and permissions to provision the cluster.

If teams manage to argue enough through this to make that self-service (queue, vendors sending me a bunch of examples where a VPC is created for you in a third party UI), then teams may also build some way to template the provisioning of a cluster – but both don’t have to be templated. Those three provisioning problems (account, networking, cluster) can co-exist as separate “vending machine” exercises. Provisioning a cluster is likely a lot easier to template than the other two. This is especially true right now while everyone worries about IPv4 vs IPv6.

This is all without using a 3rd party abstracted cluster vending tool already to do it for teams because the CNCF exploded with companies who tackled just the above three spaces thinking provisioning was the easy win for go to market. Fancy UIs does not make it better because doing it via a UI or doing it via the CLI still doesn’t solve for the people and ticketing problems – arguing through who owns what responsibility until you get to self-service makes it better. Let’s say you are past this though or in a good spot where provisioning all of these things takes less than week for a customer. Then what? The COPE team is… done?

No. If they were done, then during an incident they’d never be roped in. Done implies everyone knows how to use Kubernetes well and the applications run well on it. Even Kubernetes teams are still learning how to do Kubernetes well.

That’s the problem. Is the goal for a company to create a bunch of clusters and ignore them? It is by far not. Is it cheaper and operationally easier to have more clusters or less clusters and more people using them? If a cluster is shared between a COPE team and an application team, who defines access for which part of the applications, daemonsets, agents, cron jobs, tagging, etc for hosts? How does the on call model look?

How do they define said access?

Shared responsibility?

Who writes the policies as code and all the guardrails?

For. each. product. on. it?

Best Practices for Applications in Kubernetes is the Hard Part to Vend

I mentioned that provisioning is not the hard part for which a customer would truly adopt a cluster vending machine and it’s the third blog post where I’ve said this which is why after re:invent people asked me to write about it.

I think EKS already knows that provisioning clusters is not the hard part because they are a brilliant team – but there are several AWS customers in the world who still believe provisioning clusters is the hard part and many third parties who signed up their business for that problem. It is only one problem that takes a small amount of time compared to living in Kubernetes every day as an application engineer. Isolation between the COPE team and the application team is by far is the hardest part of Kubernetes to make self-service and where the most pain and time is spent.

This is because in Kubernetes you can isolate via node pools, via namespaces, and RBAC – and the many reasons you would want to do so are numerous – everything from compute testing, to application releases, to types of workloads running on a cluster. It would take a product manager dedicating their full time to each of these isolation aspects and then self-servicing each of these problems if they were worth it and could be standardized. It would also take agreeing on when to use different namespaces (team? release? Environment?), how you want to structure your teams against those namespaces and node pools, and then trying not to change these, or designing the self-service model as if they could or would constantly change.

Newly adopting customers are also not necessarily aware of best practices for applications in Kubernetes and have to be trained. This is true whether guardrails exist for customers or not (You’re either training on the why a guardrail exists or you are training on the absence of the guardrails). At the same time, COPE teams have to still continue to build guardrails that are not so restrictive that customers can’t do what they need. Meanwhile, the end user, the customers of both the COPE team and the application teams may evolve in their use cases, which changes the requirements of the application teams.

As applications teams are enabled to become more independent, or rather “more decentralized” the problem actually gets harder. For example, the more an application team is enabled to focus on only their applications and only their stack, the more they have to trust the COPE team’s access and responsibilities and care about best practices of Kubernetes less. They have to let COPE do their job – scale the workload underneath, respond to incidents, understand the shared workload. You know, kind of how like we all have to trust AWS managed services.

Because of these reasons it is almost impossible to get to a fully decentralized model as the Platform Engineering team is constantly learning from its customers and its customers are always wanting more access to do new and innovative things.

This is the framework and foundations for why I would argue that a “cluster vending machine” wouldn’t be worth having in EKS unless it ALSO tried to take a stab deeply at these problems for example, self-servicing namespaces, application templates, suggestions for replicas, suggestions for pod disruption budgets, priority class enforcement, pool visibility and suggested taints and tolerations, pod topology constraints — that can be defined by a COPE team and application teams together for standard workloads if the plan were to reuse them – to name a few.

I looking forward to the next 5 years because I have faith we’ll get somewhere interesting, and I have faith in the people who work in this space both in my teams and in the partners I am grateful to work with everyday – it will certainly be fun the whole time and never boring.

It’s a flippin’ hard problem to create a good vending machine for Kubernetes. It requires everyone to care a whole lot about everything but the cluster itself.

Image Credit(s): Header image from Unsplash by Stéphan Valentin. “Man in front of a Vending Machine” image from Unsplash by Victoriano Izquierdo.