Nothing lasts forever. And [Pandora’s Cluster] is going to take us down. 🎵

I read the resume of a friend who worked at a AAA publisher – I’ve changed the numbers so readers can’t reverse-engineer it. The company had 300+ clusters but fewer than 3,000 nodes. I pushed my laptop away. I put my face in my hands. I don’t judge. I don’t know the situation. Infrastructure jobs are hard. In my wildest dreams, I imagine what it’s like over there: the world this leader left. Strictly from one bullet of math.
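Spelled out, that one bullet of math (using the altered numbers) looks roughly like this. The second line adds an assumption that is not in the resume: that each cluster keeps pace with upstream Kubernetes, which currently ships about three minor releases a year, and that a control plane moves one minor version per upgrade.

$$
\frac{\text{nodes}}{\text{clusters}} < \frac{3000}{300} = 10 \ \text{nodes per cluster, on average}
$$

$$
\text{control-plane upgrades per year} \geq 300 \times 3 = 900
$$

That is before counting a single node pool inside those clusters.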

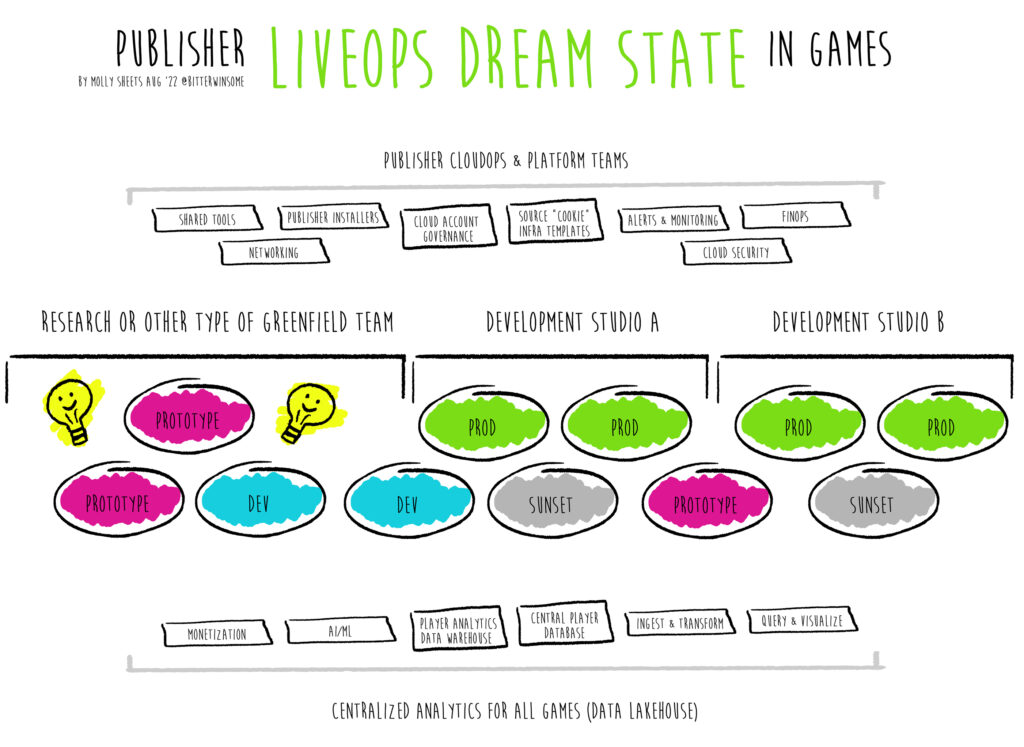

I hope that in the architectural paradigm of microservices we strive for fewer clusters and more isolated nodes and isolated apps. It’s tempting to bring old models to new methodologies, thinking that is what keeps an organization safe: if I have lots of environments, that prevents outages, right? But what if we can have our cake and eat it too? Can we have isolation without signing up for that kind of maintenance pain?

Players are what matters. Transparency is what matters. Improving. Learning. This is what matters. We want to knock players offline a lot less often, and in smaller pools when we do. A whole lot less. But what does less look like? We talk about it a lot for applications: feature flags. But what about infrastructure? Compute? Sometimes it feels like the only solution is regional models, active-active cluster models. Go big. Or go home. What if that’s only half true?

Is it more accounts and more clusters? Or fewer accounts and more clusters? Or fewer accounts, fewer clusters, and more…node pools?

Teams can run a Kubernetes cluster and pool its hosts (nodes) by organizational convention and application, rather than designing the number of clusters and accounts around each application. This means the control plane and worker nodes can be upgraded according to organizational principles and the desired testing blast radius. It means a team can route traffic and complete isolated testing with real users within the cluster itself. There are many advantages to having fewer clusters, and even more to having isolated node pools. Node pools let a team run a single Kubernetes cluster for an environment (dev, stage, or production, for example) while still segmenting the worker nodes that respond to that cluster’s control plane. But wait! Should we have dev, stage, and production accounts? Dev, stage, and production clusters? Dev, stage, and production pools? How should we do the networking? Wow! Who can tell me with confidence what is 100% the right way to do isolated testing!?
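To make "upgraded according to the desired testing blast radius" concrete, here is a minimal, hypothetical planning sketch, not any provider’s API. It assumes the upstream Kubernetes version skew policy (nodes are never newer than the control plane and, in current releases, lag it by at most three minor versions) and that a control plane moves one minor version per upgrade. `NodePool`, `upgrade_plan`, and the use of node count as a blast-radius proxy are all illustrative choices.

```python
from dataclasses import dataclass

# Hypothetical sketch, not a provider API. Versions are Kubernetes minor
# numbers (29 means v1.29). Assumes the upstream version skew policy:
# nodes must never be newer than the control plane, and may lag it by at
# most MAX_KUBELET_SKEW minor versions (3 in current Kubernetes releases).
MAX_KUBELET_SKEW = 3


@dataclass(frozen=True)
class NodePool:
    name: str
    nodes: int  # node count, used here as a rough proxy for blast radius
    minor: int  # current kubelet minor version in this pool


def upgrade_plan(cp_minor: int, pools: list) -> list:
    """Plan a one-minor-version upgrade: control plane first (nodes may not
    be newer than it), then pools from smallest to largest blast radius."""
    target = cp_minor + 1  # control planes move one minor version at a time
    too_old = [p.name for p in pools if target - p.minor > MAX_KUBELET_SKEW]
    if too_old:
        # These pools would fall outside the skew window once the
        # control plane moves, so they must be upgraded first.
        raise ValueError(f"upgrade these pools first: {too_old}")
    steps = [f"control plane: 1.{cp_minor} -> 1.{target}"]
    for pool in sorted(pools, key=lambda p: (p.nodes, p.name)):
        steps.append(f"pool {pool.name} (nodes={pool.nodes}): 1.{pool.minor} -> 1.{target}")
    return steps


pools = [
    NodePool("game-a", nodes=40, minor=29),
    NodePool("canary", nodes=1, minor=29),
    NodePool("game-b", nodes=12, minor=29),
]
for step in upgrade_plan(29, pools):
    print(step)
# control plane: 1.29 -> 1.30
# pool canary (nodes=1): 1.29 -> 1.30
# pool game-b (nodes=12): 1.29 -> 1.30
# pool game-a (nodes=40): 1.29 -> 1.30
```

Putting the one-node canary pool first means a bad node version reaches one node before it reaches forty, all inside the same cluster. Real teams might order pools by criticality or player traffic rather than node count.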

Literally no one. Isolation is a journey, not a destination, and it has to balance operational challenges against security. That said, I freaking love node pools so much. Like so very much. If you love them too, DM me someday.

From Google’s GKE documentation on node pools: “You can create, upgrade, and delete node pools individually without affecting the whole cluster. You cannot configure a single node in a node pool; any configuration changes affect all nodes in the node pool.” So if we want to test on a single node in production, we need to create a new node pool. That is still better than creating a new cluster that isn’t representative of the real world, because we are still testing in production, with real traffic, on (for example) a new compute type.

From Microsoft’s AKS documentation: “In Azure Kubernetes Service (AKS), nodes of the same configuration are grouped together into node pools. These node pools contain the underlying VMs that run your applications. The initial number of nodes and their size (SKU) is defined when you create an AKS cluster, which creates a system node pool. To support applications that have different compute or storage demands, you can create additional user node pools. System node pools serve the primary purpose of hosting critical system pods such as CoreDNS and konnectivity. User node pools serve the primary purpose of hosting your application pods. However, application pods can be scheduled on system node pools if you wish to only have one pool in your AKS cluster. User node pools are where you place your application-specific pods. For example, use these additional user node pools to provide GPUs for compute-intensive applications, or access to high-performance SSD storage.” This extends the idea of isolation further, with different types of node pools for different compute needs.
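How do pods actually end up on the right pool? In Kubernetes this is typically done with node labels plus `nodeSelector`, and with taints plus tolerations. Below is a minimal, heavily simplified model of just those two scheduler filters, not the real kube-scheduler. The label key `example.com/pool` and all node and pod names are hypothetical (managed services apply their own pool labels), and the system-pool taint is modeled on the `CriticalAddonsOnly=true:NoSchedule` taint that AKS documentation suggests for dedicated system pools.

```python
from dataclasses import dataclass, field

# Hypothetical, heavily simplified model of two kube-scheduler filters:
# nodeSelector label matching and hard taints (NoSchedule / NoExecute).
# Resources, affinity, topology spread, etc. are deliberately ignored.
HARD_EFFECTS = {"NoSchedule", "NoExecute"}
POOL = "example.com/pool"  # hypothetical pool label key


@dataclass(frozen=True)
class Taint:
    key: str
    value: str
    effect: str  # "NoSchedule", "PreferNoSchedule" or "NoExecute"


@dataclass(frozen=True)
class Toleration:
    key: str
    value: str = ""
    operator: str = "Equal"  # "Equal" or "Exists"
    effect: str = ""  # empty string matches every effect

    def tolerates(self, taint: Taint) -> bool:
        if self.key != taint.key:
            return False
        if self.effect and self.effect != taint.effect:
            return False
        return self.operator == "Exists" or self.value == taint.value


@dataclass
class Node:
    name: str
    labels: dict
    taints: list = field(default_factory=list)


@dataclass
class Pod:
    name: str
    node_selector: dict = field(default_factory=dict)
    tolerations: list = field(default_factory=list)


def fits(pod: Pod, node: Node) -> bool:
    # Every nodeSelector entry must match a node label exactly.
    if any(node.labels.get(k) != v for k, v in pod.node_selector.items()):
        return False
    # Every hard taint on the node must be tolerated by at least one toleration.
    return all(
        any(t.tolerates(taint) for t in pod.tolerations)
        for taint in node.taints
        if taint.effect in HARD_EFFECTS
    )


def candidate_nodes(pod: Pod, nodes: list) -> list:
    return [n.name for n in nodes if fits(pod, n)]


nodes = [
    # System pool: tainted so ordinary application pods stay off it.
    Node("system-1", {POOL: "system"}, [Taint("CriticalAddonsOnly", "true", "NoSchedule")]),
    # User pool for one game's workloads.
    Node("game-a-1", {POOL: "game-a"}),
    # A one-node canary pool on a new compute type, opt-in only.
    Node("canary-1", {POOL: "canary"}, [Taint("canary", "true", "NoSchedule")]),
]
api = Pod("api", node_selector={POOL: "game-a"})
api_canary = Pod(
    "api-canary",
    node_selector={POOL: "canary"},
    tolerations=[Toleration("canary", "true", effect="NoSchedule")],
)
print(candidate_nodes(api, nodes))  # ['game-a-1']
print(candidate_nodes(api_canary, nodes))  # ['canary-1']
print(candidate_nodes(Pod("batch"), nodes))  # ['game-a-1']
```

The pod with no selector and no tolerations can only land on `game-a-1`: taints keep it off both the system pool and the canary pool. Only workloads that explicitly opt in reach the single canary node, which is how one production node can take real traffic without a new cluster.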

From Amazon’s EKS documentation: “With Amazon EKS managed node groups, you don’t need to separately provision or register the Amazon EC2 instances that provide compute capacity to run your Kubernetes applications. You can create, automatically update, or terminate nodes for your cluster with a single operation. Node updates and terminations automatically drain nodes to ensure that your applications stay available.” Same idea, new cloud. EKS also supports self-managed node groups: “A cluster can contain several node groups. If each node group meets the previous requirements, the cluster can contain node groups that contain different instance types and host operating systems. Each node group can contain several nodes.”

This is an older blog post on EKS managed node groups by Raghav Tripathi, Michael Hausenblas, and Nathan Taber, but it’s still a great one for history buffs who like to…Doctor Who themselves into the TARDIS of infrastructure to look at trends of the past. The limits have changed; if you are reading this in the future, visit the Service Quotas page for the latest. When that blog was written in 2019, EKS supported “10 managed node groups per EKS cluster, each with a maximum of 100 nodes per node group.” As of this writing, 3.5 years later, EKS clusters can have 30 managed node groups per cluster with up to 450 nodes per managed node group. That’s a LOT OF NODE GROUPS for one cluster.
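Multiplying the two quotas shows the ceiling they imply on managed-node-group nodes in a single cluster. Treat these as quota ceilings only: upstream Kubernetes documents that it is designed for clusters of no more than 5,000 nodes, so other limits are likely to bind well before the newer product.

$$
\underbrace{10 \times 100}_{2019} = 1{,}000 \ \text{nodes}
\qquad\qquad
\underbrace{30 \times 450}_{\text{as of writing}} = 13{,}500 \ \text{nodes}
$$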

What all three providers are tackling is a move toward controlled isolation in production and in rollouts, while still allowing control across all hosts. More generally, Kubernetes itself continues to support the mantra of isolation. I like to tell people that K8s lets companies act like a mini cloud provider for their teams, because it does – if they use it that way. The old models do not fit this structure; one must assume they are becoming part of the nirvana with the hosts.

Node pools mean cluster owners can isolate specific workloads on specific compute nodes. How many clusters a team has depends largely on the account isolation and environment principles it wants, and Kubernetes is the de facto model for isolated designs. I’d rather recommend that four games with similar usage patterns and shared dependencies, built by the same team, share the same cluster with isolated compute node pools than see those four games each get separate dev, stage, and production accounts (with clusters in each). If the games benefit from similar architectural backends and the limits work for them, design the networking, number of clusters, node pools/groups, and namespaces around those shared constructs, and test closer to end users with a smaller blast radius each time. This is coming from someone who loved, and still loves, the idea of account isolation. I’m torn because I see the potential of shared resources in the same account while still knowing the struggle. “But Molly! This is not what the AWS Organizations whitepaper recommends! They say to isolate workloads and environments by account for security!” You are right. But truly, ask AWS: was the AWS Organizations whitepaper written with the challenges of game studios, and of Kubernetes, in mind? Or was it a one-size-fits-all approach regardless?
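Counting control planes makes the four-games comparison concrete. A rough tally, assuming (for illustration) one cluster per environment in the shared design and one node pool per game in each of those clusters:

$$
\begin{aligned}
\text{separate accounts per game:}\quad & 4 \ \text{games} \times 3 \ \text{environments} = 12 \ \text{clusters} \ (12 \ \text{control planes}) \\
\text{shared cluster per environment:}\quad & 3 \ \text{clusters} \ (3 \ \text{control planes}), \quad 3 \times 4 = 12 \ \text{node pools}
\end{aligned}
$$

Compute segmentation, one isolated pool per game per environment, is the same in both designs; what shrinks is the number of control planes to upgrade and operate, from 12 to 3.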

How much harder does it become to update or test anything, and how much risk does the added complexity introduce, when every single workload environment is isolated by account rather than by node pool convention for compute needs, with namespaces and RBAC for security? Teams are challenged by complexity because it depends on both compute needs and access. I want to know how my production workload handles a brand-new host type, for example, and I don’t want a new cluster to find out. These are the questions architects must ask before locking in account structures and eventually landing in the nightmare of (way more than) 300 clusters with only a small number of nodes and little pooling. Security, risk, and isolation are a journey, not a destination.

The worst thing we can do as users of a cloud provider is take a cloud whitepaper and say, “This is the way,” when providers shape their guidance around what customers want. The single most important thing to understand about Kubernetes is what its constructs are great for: isolated use cases under the same control plane, and the variety of configurations at its disposal for isolation.

There are real reasons to get another cluster. Maybe one team is vastly different from another. But if a team, or a subsidiary, is really one team, consider what that team is actually asking for within its DevOps world. Truly think on it: what do you want to make similar? What do you want to make different? What access patterns do you want to support? How do you want to organize yourself around it? With great clusters comes great responsibility for the entire company.

Maybe one team will decide that what they actually want is one giant sandbox cluster that everyone new to Kubernetes can destroy at will, learning the value of node pools before asking for a new cluster entirely.

Don’t say no to new clusters.

But always take the time to ask, “What are we really trying to achieve?” It may prevent a business from landing at 300 clusters to upgrade, with 6-10 nodes apiece (plus a few giant galaxies among them), for no reason other than “We never paused to think really, really big about the architecture because we goaled ourselves on more clusters. We didn’t figure out how to learn together that what we really needed was to slow down and understand…as we adopted Kubernetes…the incredible promise of node pools.”

Image credit: NASA, Pandora’s Cluster: “…A conglomeration of already-massive clusters of galaxies coming together to form a megacluster. The concentration of mass is so great that the fabric of spacetime is warped by gravity, creating an effect that makes the region of special interest to astronomers: a natural, super-magnifying glass called a ‘gravitational lens’ that they can use to see very distant sources of light beyond the cluster that would otherwise be undetectable, even to Webb.” From the James Webb Space Telescope, Feb. 15, 2023. SCIENCE: NASA, ESA, CSA, Ivo Labbe (Swinburne), Rachel Bezanson (University of Pittsburgh). IMAGE PROCESSING: Alyssa Pagan (STScI).