“The Way It Was” by Coast Modern 🎵

Sept 18th was such a great day to wake up sick.

It was also a good reminder of how amazing our whole extended team is. Like really, really, really amazing. Alas, I am reminded this is a personal blog so I won’t expand deeply.

In a previous post, “The Darkest Timeline,” I wrote that I would cover in part 2 “the financial reasons [teams] should laugh during and after an incident.” This is that post. Thanks, universe.

Background Context

I’ve been annoying and verbose about this before. 😀

For example, in “Yes, I Love Ops: Because We Do Not Fear Production,” I said that speaking poorly about ops is a great way to make sure no one wants to do it, stating:

How we talk about what we do is the message we send to the people we care about and who they will become.

In “SEV 1 Party: Prod Down. Bridge Up” I explained why we can’t avoid testing in production: we already are. 😀 😀

I also touched on the fact that vendors deploy constantly. They recognized that problems no longer come from deployment changes alone; they also come from customer behavior patterns that can only be seen in production, sometimes months after the deployment was staged.

A third-party provider could push an update that would be fine under normal conditions but, under the wrong conditions, could cause issues that teams could never have prepared for if they had trusted a configuration to be resilient.

A SEV 1 caused by customers resembles the very first step in “The Darkest Timeline,” “An ignored or misunderstood, or unseen issue…has existed for a while and no one noticed,” and the later realization that “what actually happened was that months ago we rolled a bunch of dice and that’s why we’re here.”

Existential cringe.

The only way for those who depend on these systems to beat this is to make everyone, quite literally all of us, everywhere, faster.

The Requirement of Change is Cultural, Not Technical

Infrastructure leaders realized 10 years ago that we needed a new culture, namely #hugops, because infrastructure had gotten complex. That culture? It wants to push to production, because the “change failure” rate goes down and because some failure comes from bullshit we can’t control.

For example, Charity Majors wrote in Observability Engineering (p. 30) about a Norwegian death-metal band becoming really popular and putting stress on Parse (later acquired by Facebook). It’s not like they could tell the band to suck, stop playing, or skip the concert livestream at the crack of dawn US time.

The early adopters of #hugops culture realized that to save money, people need to feel safe to speak openly and report what they saw happening, instead of hiding it or never opening an incident at all. It’s not enough to just say teams want to feel safe to deploy often; teams have to make epic (culture) changes. They realized they didn’t want to build “heroic firefighter cultures” or be compliance villains on bridges. They didn’t want to bring the incident mantras of the past into this future.

The better they got at it, the faster they wanted to deploy, because it made them safer, they learned more, and they became happier with each outage. They started to reward and recognize it: not rewarding people for responding, but for failing. It gave their systems practice, but it also gave their leaders a chance to practice their behavior before, during, and after.

That behavior? Translates to dollars per second.

What Costs Teams Money: Preventing Deployments with Manual Approvals & Highly Critical Bridges

To explain why laughing matters, we first have to understand, in detail, what costs development teams money.

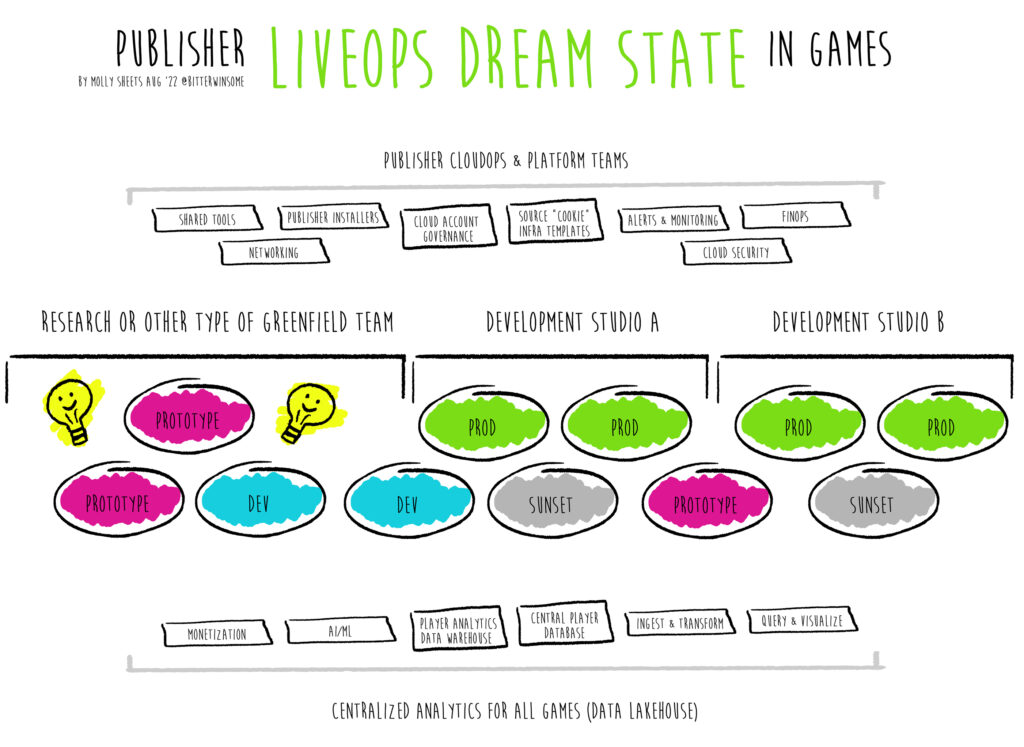

When teams look at GitOps, they often run at it by looking at the technical solution first. For example, in August 2022, before I joined Zynga, I wrote “Server CI/CD: Deployments for Microservices” and cited GitOps with ArgoCD. I wrote it as a reflection on the state of the games industry at the time, which generally still carried a lot of process compared to other industries.

Deleting humans in the loop deletes responsibility and puts accountability in the hands of the team, not the individual. I am again going to steal from Charity Majors’ work, this time from her interview in The New Stack, where she describes the ideal scenario for GitOps:

May 10th, 2022, by Jennifer Riggins, recapping Charity Majors’ “Recipe for High-Performing Teams” keynote at the WTF is SRE conference.

- Following peer review, an engineer merges a single change to main,

- Hiding any user-visible changes behind feature flags, decoupling deploys from scheduled releases,

- Which triggers CI to run tests and build an artifact.

- This artifact is then deployed or canary released to production with no human gates. (There may be other automated gates or scheduling for release.)
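The steps above can be sketched as a toy model to make the “no human gates” part concrete. This is a minimal sketch under stated assumptions, not any real CI/CD tool’s API and not Charity’s implementation: `Change`, `Pipeline`, the 1% canary error-rate threshold, and the in-memory `flags` dict are all hypothetical stand-ins.

```python
from dataclasses import dataclass, field


@dataclass
class Change:
    # Hypothetical change record; field names are illustrative only.
    sha: str
    peer_reviewed: bool
    tests_pass: bool
    flag: str  # feature flag hiding the user-visible part of the change


@dataclass
class Pipeline:
    # Assumed automated gate: canary error rate must stay at or below 1%.
    max_canary_error_rate: float = 0.01
    flags: dict = field(default_factory=dict)  # flag name -> visible to users?
    deployed: list = field(default_factory=list)

    def on_merge(self, change: Change, canary_error_rate: float) -> str:
        # Only peer-reviewed changes are merged to main.
        if not change.peer_reviewed:
            return "rejected: needs peer review"
        # The merge triggers CI, which runs tests and builds an artifact.
        if not change.tests_pass:
            return "rejected: CI failed"
        artifact = f"build-{change.sha}"
        # Canary release with an automated gate; there is no human approval step.
        if canary_error_rate > self.max_canary_error_rate:
            return f"rolled back: {artifact}"
        self.deployed.append(artifact)
        # New flags start off, so deploying is decoupled from releasing.
        self.flags.setdefault(change.flag, False)
        return f"deployed: {artifact}"

    def release(self, flag: str) -> None:
        # Releasing to users is a separate flag flip, scheduled independently.
        self.flags[flag] = True


pipeline = Pipeline()
print(pipeline.on_merge(Change("abc123", True, True, "new_shop"), 0.002))
print(pipeline.on_merge(Change("def456", True, True, "new_chat"), 0.05))
pipeline.release("new_shop")
print(pipeline.flags)
```

The design point is that every gate is a check the machine evaluates; the only human decision left is when to flip a flag, which is a release decision, not a deploy decision.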

This is also true for infrastructure changes, not only applications (though perhaps slower than 15 minutes). More important is her fourth bullet, whose financial impact she explains further in her blog post “How much is your fear of continuous deployment costing you?” Because she is brilliant, I am quoting that here too, but you should read the whole post for the full breakdown:

“Let (n) be the number of engineers it takes to efficiently build and run your product, assuming each set of changes will autodeploy individually in <15 min.

- If changes typically ship on the order of hours, you need 2(n).

- If changes ship on the order of days, you need 2(2(n)).

- If changes ship on the order of weeks, you need 2(2(2(n))).

Your 6 person team with a consistent autodeploy loop would take 24 people to do the same amount of work, if it took days to deploy their changes. Your 10 person team that ships in weeks would need 80 people. At cost to the company of approx 200k per engineer, that’s $3.6 million in the first example and $14 million in the second example.”
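The arithmetic in that quote can be checked with a few lines. This is a minimal sketch: the cadence labels are my own naming, the weeks case (three doublings) is inferred from the 10-to-80 example, and the approx. $200k per engineer comes straight from the quote. The dollar figures are the extra cost over the autodeploy baseline.

```python
# Each step slower than a <15 min autodeploy loop doubles the engineers needed.
# Cadence labels are illustrative; "minutes" stands for the <15 min baseline.
DOUBLINGS = {"minutes": 0, "hours": 1, "days": 2, "weeks": 3}


def engineers_needed(n: int, cadence: str) -> int:
    """Engineers needed to do the work of an n-person autodeploy team."""
    return n * 2 ** DOUBLINGS[cadence]


def extra_cost(n: int, cadence: str, cost_per_engineer: int = 200_000) -> int:
    """Extra cost versus the autodeploy baseline, at the quoted ~$200k each."""
    return (engineers_needed(n, cadence) - n) * cost_per_engineer


for n, cadence in [(6, "days"), (10, "weeks")]:
    print(n, cadence, engineers_needed(n, cadence), f"${extra_cost(n, cadence):,}")
```

Running it reproduces the quote: 6 engineers become 24 ($3,600,000 extra), and 10 become 80 ($14,000,000 extra).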

Humans in the loop are not autodeploy.

That’s not a technical challenge; it’s an adaptive one. It may take months for the people who need to agree with you to be okay with everyone, across many teams, deleting humans in the loop and manual approvals (not just adding automation), and never replacing them with the same or a similar process just to reach feature parity.

That’s okay if teams agree they want it and are beginning to understand the value, because it’s becoming more apparent that severity is no longer what it was 5 years ago. How epic are our changes?

What Saves Teams Money: Laughter & Moving On

It is a good time for anyone in games to look back at the worst outage they’ve been in over the last 5 years and ask themselves, “Could I have predicted this and truly planned for it any better?” and “Is it still the same?”

Re-read those lessons learned.

Make sure they were the right ones.

When I look at any timeline, be it a roadmap, a game’s launch, or an incident, I ask myself: could this team have moved faster?

Or, given the information, investment, and processes (and practice) available to them, did they do everything they could?

For incidents, the answer now is almost always no: the RSVPs to SEV 1 invites often couldn’t have come any faster, given where the team was that day and the processes and information available to the bridge. Once on the bridge, teams couldn’t have moved faster either, because when your bridge is supportive or high performing, you’re probably already moving very fast through discovery, mitigation, and recovery. For the most part that’s what I see today, because I’m lucky, but your mileage may vary. As I’ve said before, true SEV 1s are rare, so you don’t get a lot of real practice.

I started laughing on bridges, or at minimum saying how awesome things were going, after I realized how much faster things moved when we rewarded ourselves in real time for what we had done or were doing well, instead of focusing only, or mostly, on things that were almost impossible to make any faster.

This made people feel safer making live changes themselves, which distributed the load. That paid down recovery time, which translated to dollars per second.

You’d be surprised what simply saying “You’re doing a great job at comms” or “Wow, that was a genius idea, let’s do that” in real time on a bridge can do for how safe a person feels making the change they are about to make, a change that may not even be the right one despite being a good idea, because there is so much shit going on in a high severity incident. Keep being amazed by how smart the people around you are, and keep letting them know how f*cking great they are while they are in an absolute shitshow of epic proportions.

I can’t imagine how much better it would make me in that moment. I know.

So, as a hat tip to the broader industry, here is my take on Charity’s math…

Let (n) be the number of engineers it takes to be okay with making a change on a bridge in a world with high trust, autonomy, and transparency.

- If identification (discovery) typically takes on the order of seconds, because you have good observability, clear isolation and lines of ownership, you need 2(n).

- If identification (discovery) takes on the order of minutes, because there are too many tools and missing information, you need 2(2(n)).

- If identification (discovery) takes on the order of an hour, because people don’t feel safe reporting something immediately, whether because it’s tied to an external factor, because they’re unsure of its accuracy, or, worst case, because they fear discipline, you need 2(2(2(n))).

- If response (mitigation) typically takes on the order of seconds because of rapid deployments and application owners knowing their own workloads really well, you need 2(n).

- If response (mitigation) typically takes on the order of minutes, because it requires SME expertise, or application owners need guidance and are unclear on whether they should make the change, you need 2(2(n)).

- If response (mitigation) typically takes on the order of hours because it has external dependencies, you need 2(2(2(n))) and to analyze the last 6 months.

In an incident, a 3-person bridge becomes 24 people really fast, and all their salaries come with it.
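To see how fast that adds up, here is a minimal sketch of the bridge version of the model. Two assumptions are mine, not part of the model above: the slower of discovery and mitigation is what sizes the bridge, and the salary burn reuses Charity’s approx. $200k per engineer spread over an assumed 2,080 working hours a year.

```python
# Doublings per stage speed, from the bridge version of the model:
# seconds -> 2(n), minutes -> 2(2(n)), hours -> 2(2(2(n))).
DOUBLINGS = {"seconds": 1, "minutes": 2, "hours": 3}

HOURS_PER_YEAR = 2080  # assumed: 40 hours/week * 52 weeks


def bridge_size(n: int, discovery: str, mitigation: str) -> int:
    """People on the bridge; assumes the slower stage sizes the bridge."""
    doublings = max(DOUBLINGS[discovery], DOUBLINGS[mitigation])
    return n * 2 ** doublings


def salary_burn_per_hour(people: int, cost_per_engineer: int = 200_000) -> float:
    """Salary cost of keeping `people` on the bridge for one hour."""
    return people * cost_per_engineer / HOURS_PER_YEAR


best = bridge_size(3, "seconds", "seconds")  # 6, i.e. 2(n)
worst = bridge_size(3, "minutes", "hours")   # 24, i.e. 2(2(2(n)))
print(best, worst)
print(f"${salary_burn_per_hour(best):,.0f}/h vs ${salary_burn_per_hour(worst):,.0f}/h")
```

Under these assumptions the worst case costs four times as much per hour in salary alone, before counting any revenue lost per second of the outage.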

This is separate from recovery, which is often a longer window in high severity incidents. We now know anger translates into increased dollars per second, affecting not the present but the future.

We all want to be at 2n. That’s why you should laugh.

Maybe next time I will just show up in a clown suit.

Knowing they won’t be punished after an incident is key to ensuring the recovery period starts as fast as possible at enterprise scale, because it pays down discovery and mitigation: people on the bridge feel safe to make changes without causing more problems.

Eventually teams reach a point where most of the team wants to own the retrospective. They want to tell the tale of what they finally got to see and finally got to do when it mattered, because they’re bored out of their minds that nothing exciting happens anymore. That’s not because they don’t deploy fast or often enough; it’s because they do, and for the most part, it’s okay. That is, until customer behavior and adoption, or rather extreme success, is what truly causes a high severity incident they learn from.

If that has ever been you, nice job.

Image Credit: Please accept my SEV 1 Party invite made on meme generator.